Quantum computing: beyond the hype

Quantum computers are the most popular example of the new quantum technologies predicted to revolutionize the 21st century.

Their development would mark a great advance over conventional, or ‘classical’, computers. Classical computers use ‘bits’, each either 0 or 1, as their basic unit of data, which they process and store in very long strings. Quantum computers instead use ‘quantum bits’ or ‘qubits’, which can be both 0 and 1 at the same time. This allows them to represent more information, and to perform some calculations faster than a classical computer.

The speed at which a quantum computer operates, and its ability to perform many other tasks, could have surprising applications, good or bad. But there is a problem. Building quantum computers is proving to be a challenge, leading to increasing claims that they are overhyped.

So is the bubble about to burst? Skeptics keep pointing out that quantum computing, like nuclear fusion, always seems to be 10 years away. Optimism has been replaced by pessimism, and some are now talking about a ‘quantum winter’.

But while this new-found realism is welcome, it may have gone too far. The public face of quantum computing has indeed been one of unparalleled hype. But the less visible face of academic research has shown remarkable progress in building practical devices that work.

Quantum information processing was discovered independently several times, for a variety of reasons. Richard Feynman wanted to simulate physics with computers, after deciding that ‘nature isn’t classical, dammit’. David Deutsch wanted to use a quantum computer to prove the existence of parallel universes. And Stephen Wiesner invented what later became quantum cryptography, but was so disillusioned with its reception that he went back to growing vegetables.

These early proposals for a quantum computer were met with great skepticism, and many experts believed that building a quantum computer was impossible. The main reason given was that, like all devices, computers make errors. For classical computers, such errors are controlled by error-correcting codes. Unfortunately, these classical codes could not be used with quantum devices. Quantum-computing skeptics therefore believed that it would be necessary to build error-free quantum devices.

This problem was solved by Peter Shor and Andrew Steane, who independently discovered quantum error correction in the mid-1990s. The Error Correction Zoo currently lists over 300 quantum codes, optimized for different situations. This means that quantum computers don’t have to be perfect, but they still have to be very good, and building them is still a challenge.
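The principle that redundancy lets imperfect components behave like a better whole can be illustrated with a purely classical analogy: the three-bit repetition code with majority voting. This is only a sketch of the underlying idea, not one of the quantum codes mentioned above, which must also cope with effects that have no classical counterpart. The function names and the independent bit-flip noise model are assumptions made for illustration.

```python
import random

def encode(bit):
    """Protect one logical bit by storing three copies of it."""
    return [bit, bit, bit]

def apply_noise(codeword, p, rng):
    """Flip each stored bit independently with probability p (assumed noise model)."""
    return [b ^ 1 if rng.random() < p else b for b in codeword]

def decode(codeword):
    """Recover the logical bit by majority vote."""
    return 1 if sum(codeword) >= 2 else 0

def exact_logical_error_rate(p):
    """Decoding fails only if two or three of the bits were flipped."""
    return 3 * p**2 * (1 - p) + p**3

def simulated_logical_error_rate(p, trials=100_000, seed=0):
    """Estimate the failure rate by repeatedly sending a logical 0 through the noise."""
    rng = random.Random(seed)
    failures = sum(
        decode(apply_noise(encode(0), p, rng)) != 0 for _ in range(trials)
    )
    return failures / trials
```

With a physical error probability of 0.01 per bit, `exact_logical_error_rate(0.01)` is about 0.0003, roughly thirty times better than an unprotected bit. The improvement only appears when the components are already fairly reliable: at an error probability of 0.5 the encoding gives no benefit at all.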

Quantum computers with a few qubits have been demonstrated for decades, and simple devices can be bought as desktop toys, but these are very much toys. Another early demonstration was using Peter Shor’s quantum factoring algorithm to find the prime factors of 15, which is not a hard problem.
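To see why factoring 15 is not a hard problem, the number-theoretic part of Shor’s approach can be sketched on an ordinary computer. In the quantum algorithm, the expensive step is finding the order of a number modulo N; for N = 15 that step can simply be done by brute force, as below. The function names are illustrative, and this is a classical sketch of the arithmetic, not a quantum implementation.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step a quantum computer would speed up for large n.
    """
    if gcd(a, n) != 1:
        raise ValueError("a and n must share no common factor")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a, n):
    """Try to split n using the order of a; return None if this a is unlucky."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: pick a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # only trivial factors would result: pick a different a
    return tuple(sorted((gcd(half - 1, n), gcd(half + 1, n))))

print(factor_from_order(7, 15))  # (3, 5)
```

For a = 7 the order is 4, since the successive powers of 7 modulo 15 are 7, 4, 13 and 1, and the two greatest common divisors then give the factors 3 and 5. The brute-force loop is what becomes infeasible for the very large numbers used in cryptography.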

A useful quantum computer requires large numbers (thousands to millions) of high-quality qubits (error rates under one part in a thousand, and ideally around one part in a million). Both goals are difficult, and achieving them simultaneously is even more difficult. The obvious strategy is to solve the problem in two stages, but which should be solved first? Here, industrial and academic approaches differ.

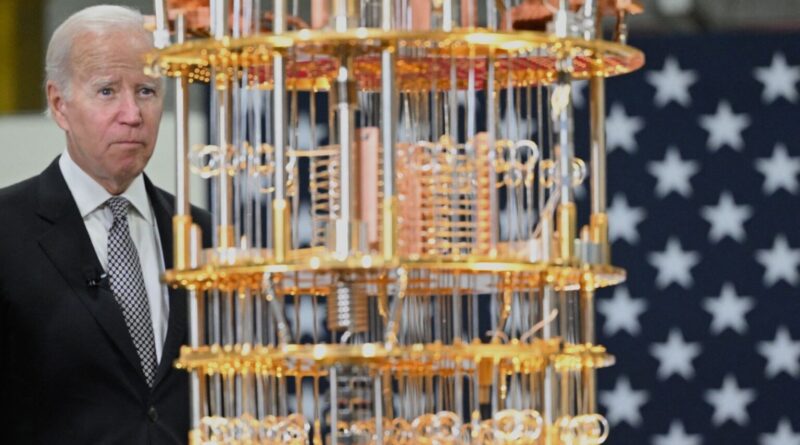

Major industry efforts, led by the likes of Google and IBM, have focused heavily on superconducting technologies. These are well suited to building large numbers of qubits, using methods familiar from other nanotechnologies. However, these qubits are usually of low quality, and the devices are often called Noisy Intermediate Scale Quantum (NISQ) computers, to distinguish them from true quantum computers.

Whether these NISQ devices are useful is still controversial, and most of the quantum hype relates to this question. Claims are often made that NISQ devices have reached ‘quantum supremacy’, that is, that they have performed calculations thought to be beyond the power of any conventional computer. But these claims are regularly refuted by people who then perform the same calculation as an ordinary simulation on a classical computer.

Academics, and academic spinout companies like IonQ, have taken a different approach. They pursue quality over quantity, believing that it is easier to scale up a good small device than to improve the quality of a large unreliable one. Many of these efforts have involved trapped-ion technology, building on experience gained in the development of atomic clocks. A few years ago, these experiments reached the ‘fault-tolerance threshold’, which means they are good enough to start the next stage. Scaling them up is still a big problem, but there are no fundamental reasons why this cannot be done.

This breakthrough with trapped ions has led to renewed interest in the field, and some believe that quantum computers may be just a decade away. But a third method, based on trapped atoms rather than trapped ions, has recently been developed. This approach shows remarkable promise.

Trapping and transporting weakly interacting atoms is more complicated than doing the same for strongly interacting charged ions. But these weak interactions bring their own benefits. In March 2024, Caltech researchers reported trapping and manipulating more than 6,000 atoms, using techniques adapted from optical tweezers, and performing simple operations with the precision needed for quantum computing. Although several important requirements are still missing, this rapid development means that there is now a clear third player in the quantum game.

It’s too early to tell what the future of quantum computing holds. But perhaps quantum spring is in the air.

Jonathan Jones is a professor of Physics, working within the Department of Atomic and Laser Physics on Nuclear Magnetic Resonance (NMR) Quantum Computation at the University of Oxford.
